I’ve written before about using WeSay to collect language data, and at one point I even wrote up a proposal for features I think it would be nice to have there, specifically targeted at orthography development, though the same tools could be used for spell checking (lining up words with similar profiles, that is, not coming up with a list of correctly spelled words). Anyway, it doesn’t look like that proposal is going anywhere, so I thought I’d give it another try.

The Basic Problem

- I’m working in an increasingly large number of languages (five people representing three languages were in my office most of this morning), and I’m looking to see that trend continue (looking to the 60 unwritten languages in eastern DRC).

- I am one person, and can only work on one language at a time (however often the language may change throughout the day).

- The best tool we have for sorting and manipulating large amounts of lexical data (FLEx) is great at what it does, but is inaccessible to most of the people I work with (it was made for linguists, after all. :-))

- So I am left with doing all the work with each guy in FLEx (see the second point above), or else finding a tool that can be more easily used by the people in the first point.

Getting from Data collection to an Orthography

For a number of tasks (word collection), WeSay does exactly what I want. Since most of these people are touching a computer for the first time, let’s get rid of as much of the complexity and room for error as possible. Do one thing at a time, in a constrained environment. But once I’ve collected a wordlist in WeSay, I still need to

- Parse the roots out of the forms collected. I can collect plural forms in WeSay now, but we still need to get the full word forms into a field meant for full word forms (e.g., <citation>), and just roots in the lexical-unit field. In case it isn’t obvious, a lot of the phonological analysis depends on the structure of the root: the first root consonant is more important to our analysis than the first word consonant, so we need to be able to sort/filter on it.

- Sort and filter the word forms, so we see just one kind of thing at a time. We don’t want to check whether kupaka and kukapa have the same ‘p’, since the difference in word position might cause a difference that is irrelevant to the phonemic system (English speakers pronounce p’s differently in different environments, but use one letter for them all; did you know?).

- Go through each controlled list of words, to see where the current writing system (we use a national language to get us started) is not making enough distinctions, and where it is making too many.

- Mark changes on the appropriate words, returning the corrected information to the database.

While FLEx can handle all these tasks fine (however slowly at times), if I’m going to help other people move forward in their own language development, I need to find another tool (or tool set). I have got these guys quite happily working in WeSay (yes, there are kinks, but still we all are often working 3-4 times as fast as if I was typing everything myself, since there are more of us typing), but I need to get ready for these next steps, in a way that allows us to build on this momentum, rather than tell everyone but one team to go home until I have more time.

An Attempt

So I’ve been playing with XForms lately (for a lot of other reasons), and I’ve toyed with the idea of getting at and manipulating the LIFT file with another engine. The idea of being able to write a simple form to control the complexity of the underlying XML, and to manipulate it and save back to XML, was very exciting. I even have one form (for collecting noun class permutation examples) deployed. Then I read the post describing the death of the Firefox XForms extension, and I thought surely there must be a better way to do this, and I’m sure someone out there knows what it is. So I’ll spend some time outlining exactly what I’d like to see, and maybe someone will know what to do to make it happen.

A Proposal

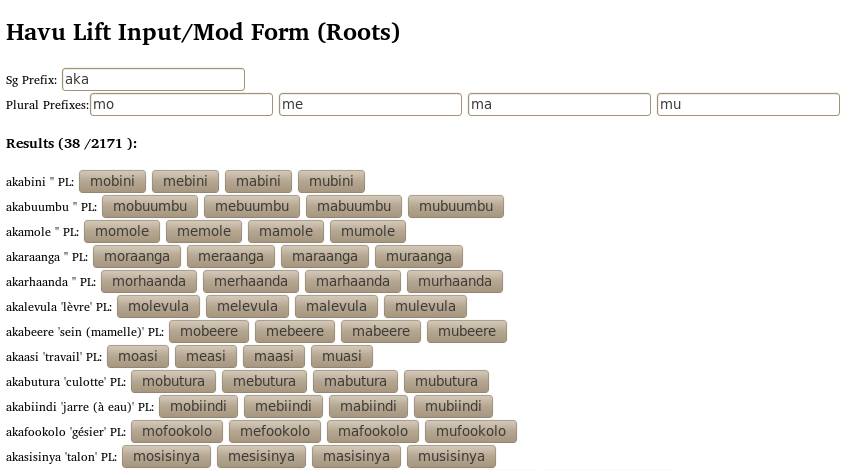

I took some screenshots of some xforms I did, displayed in firefox. Here’s the first form, for parsing roots (Havu is the name of the language I’m using to test this, and one of the next who will be looking for this to work):

The important aspects are

- The prefix of the original citation form (sg here), which filters through the database, allowing us to work on words that are (probably) just of one noun class at a time.

- A number of possible plural prefixes, to control the potential output forms

- The originally input word form, with gloss (where present), to clearly identify each word.

- A number of buttons which allow a non-linguist to simply push a button to input the plural form and parse the root (probably including a “none of the above,” which would skip processing for this word).

- Under the hood processing which would, on a click:

  - copy the original form into a citation form field in the database (potentially a new node in the LIFT XML),

  - remove the prefix from the original form, and

  - input the plural form into a field for the plural form (again, potentially a new node in the LIFT XML).

It would probably be better to show the user one word at a time, rather than the list in this screen-shot, but I included it all here, to show how the removal of the prefix, and the application of the new prefix, would need to apply to the form of each entry. Also, it would be nice if the form could be adapted for suffixing languages.
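The under-the-hood steps listed above could be sketched outside of XForms as well. The following is only a rough Python illustration using the standard library, not a tested LIFT tool: the entry is a cut-down, invented example (the word "akabaza" is made up, not real Havu data), and storing the plural in a `<field type="plural">` node is my assumption about where it could live.

```python
import xml.etree.ElementTree as ET

# Invented, cut-down entry; real LIFT entries carry many more nodes and attributes.
ENTRY_XML = """
<entry id="example">
  <lexical-unit><form lang="hav"><text>akabaza</text></form></lexical-unit>
</entry>
"""


def make_form_node(tag, lang, value, attrib=None):
    """Build <tag><form lang=...><text>value</text></form></tag>."""
    node = ET.Element(tag, attrib or {})
    form = ET.SubElement(node, "form", {"lang": lang})
    ET.SubElement(form, "text").text = value
    return node


def parse_root(entry, lang, sg_prefix, pl_prefix):
    """Copy the full form to <citation>, add a plural field, leave the root in lexical-unit.

    Returns False (and changes nothing) when the form lacks the singular
    prefix, which is the "none of the above" case.
    """
    text = entry.find(f"lexical-unit/form[@lang='{lang}']/text")
    if text is None or not (text.text or "").startswith(sg_prefix):
        return False
    full_form = text.text
    root = full_form[len(sg_prefix):]
    if entry.find("citation") is None:
        entry.append(make_form_node("citation", lang, full_form))
    # Assumption: the plural lives in a custom field node.
    entry.append(make_form_node("field", lang, pl_prefix + root, {"type": "plural"}))
    text.text = root
    return True


entry = ET.fromstring(ENTRY_XML)
parse_root(entry, "hav", "aka", "mo")
print(ET.tostring(entry, encoding="unicode"))
```

A button per plural prefix would then just call `parse_root` with a different `pl_prefix`, and a suffixing language would need the same logic applied to the end of the form.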

What I had so far for an example trigger (in XForms) would be

<xf:trigger><xf:label><xf:output value="concat('mo', substring(lexical-unit/form[@lang='hav']/text[starts-with(., 'aka')], 4))" /></xf:label>

<xf:action ev:event="DOMActivate">

<xf:insert context="." origin="instance('init')/citation" />

<xf:setvalue ref="./citation/form/text" value="lexical-unit/form[@lang='hav']/text[starts-with(., 'aka')]"/>

<xf:setvalue ref="lexical-unit/form[@lang='hav']/text[starts-with(., 'aka')]" value="substring(lexical-unit/form[@lang='hav']/text[starts-with(., 'aka')], string-length('aka') + 1)"/>

<xf:send submission="Save"/>

</xf:action>

</xf:trigger>

As you can see, the value of the prefix is hard-coded here, since I haven’t been able to get variables to work. Also, the setvalue expressions don’t really behave (neither creating a node, nor setting the right value for an existing node). It’s hard to tell what is a limitation of XForms, and what is a limitation of the Firefox extension. I tried another XForms renderer, but no luck so far… Needless to say, this is not what I do best, so help, anyone?

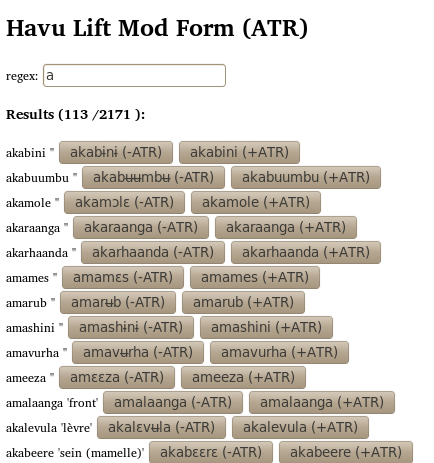

The next form I’d like sets ATR values for whole words (usually the harmony around here is fairly strict, so it would help with a lot, but not all, vowel questions):

This form is similar to the above, in that I’m looking for a simple regular expression (or a more complicated one, if possible. :-)) to control the data we’re looking at at any one time, and a binary choice for which vowel group the word belongs to (showing the new word form on the button). A choice of one or the other would set the word form accordingly.

The same caveats as above apply to the list of words on a page, and there should probably be a “neither” button for when a word doesn’t obey strict vowel harmony, which would bypass processing for that word.

The trigger I had so far (for the -ATR button, reverse for the +ATR) was

<xf:trigger><xf:label><xf:output value="translate(lexical-unit/form[@lang='hav']/text, 'aeiou', 'aɛɨɔʉ')"/>(-ATR)</xf:label>

<xf:action ev:event="DOMActivate">

<xf:setvalue context="." value="translate(lexical-unit/form[@lang='hav']/text, 'aeiou', 'aɛɨɔʉ')"></xf:setvalue>

<xf:send submission="Save"/>

</xf:action>

</xf:trigger>

The translation includes a>a, but could be modified depending on what /a/ does in a given language (especially if there are 10 vowels).
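For comparison, the same letter-for-letter translation is easy to sketch in Python. This is only an illustration of the idea, using the five-vowel mapping from the trigger above; the `obeys_harmony` check is my own assumption about how a tool might spot candidates for the “neither” button, and the test word in the usage line is invented.

```python
# Mapping taken from the trigger above: +ATR letters first, -ATR letters second.
PLUS_ATR = "aeiou"
MINUS_ATR = "aɛɨɔʉ"

TO_MINUS = str.maketrans(PLUS_ATR, MINUS_ATR)
TO_PLUS = str.maketrans(MINUS_ATR, PLUS_ATR)


def set_atr(word, minus_atr):
    """Rewrite every vowel letter in the word as -ATR (True) or +ATR (False)."""
    return word.translate(TO_MINUS if minus_atr else TO_PLUS)


def obeys_harmony(word):
    """False when the word mixes letters unique to each set ('a' is shared)."""
    has_plus = any(ch in "eiou" for ch in word)
    has_minus = any(ch in "ɛɨɔʉ" for ch in word)
    return not (has_plus and has_minus)


print(set_atr("mulume", minus_atr=True))
```

For a ten-vowel language, only the two mapping strings would need to change, as long as both stay the same length and in the same order.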

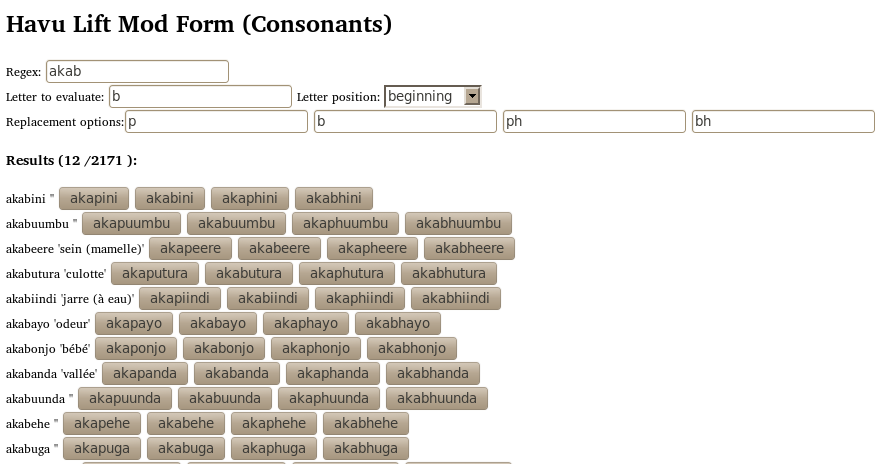

The third and final form, where we would spend the bulk of our time, might look like this:

Here we have:

- The ubiquitous regex to control the data we’re looking at.

- The letter that is being evaluated (we’re only analyzing one written form, be it digraph or not, at a time).

- The position to find that letter (something like first or second in the root, would probably be good enough).

- The options for replacing it (buttons labeled with the new forms), in case a word in the list uses a sound that doesn’t sound the same as the sound in that position in the rest of the words in the list.

Again, a choice of new word form would write the new form to the database (at which point the word would likely disappear from the list, until the regexp matched the new form of that word). It would be nice if the same replacement could be made in the citation and plural forms, presuming the same sounds and written letters would apply. I’m not sure if it would be necessary to devise two forms, one for consonants, and another for vowels. It would depend (at least) on how the regex worked, since the underlying principle is the same for consonants and vowels — look at one thing in one position at a time, and mark each one as the same or different, compared to the other things on the list.
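To make the "one letter in one position" idea concrete, here is a small Python sketch. It assumes the root has already been parsed out, and the consonant inventory is hypothetical (only d, t, ng’ and ngy come from the examples in this post; the rest is filler). Digraphs and trigraphs are matched first so that each written form counts as one consonant.

```python
import re

# Hypothetical inventory; longer graphs must be recognised before shorter ones.
CONSONANTS = ["ng'", "ngy", "b", "d", "g", "k", "p", "t", "m", "n", "s", "l", "r"]


def tokenize(word, graphs):
    """Split a word into written units, preferring the longest matching graph."""
    ordered = sorted(graphs, key=len, reverse=True)
    alternation = "|".join(re.escape(g) for g in ordered)
    return re.findall(f"{alternation}|.", word)


def replace_consonant(root, position, old, new, consonants=CONSONANTS):
    """Replace `old` with `new` only if it is the position-th consonant (1-based).

    The root is returned unchanged when that position holds something else.
    """
    tokens = tokenize(root, consonants)
    seen = 0
    for i, tok in enumerate(tokens):
        if tok in consonants:
            seen += 1
            if seen == position:
                if tok == old:
                    tokens[i] = new
                break
    return "".join(tokens)


print(replace_consonant("paka", 1, "p", "b"))
print(replace_consonant("kapa", 1, "p", "b"))
```

The same function could be run over the citation and plural forms if they were tokenized from the root position rather than from the start of the word, which this sketch does not attempt.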

Specifications

Some things we would need to make this tool useful:

- Read and modify LIFT format in a predictable and non-destructive way (making all and only the changes we’re looking for, to the fields we’re looking at, leaving everything else alone). This is the format we’re keeping all our lexical data in, and we need to play nice with a number of other programs that use the same data structure standard.

- Use of regular expressions. For the root parsing tool, a simple prefix (or suffix) filter would do, but for the others it would be nice to be able to constrain syllable type, as well as position in the word (i.e., kupapata should not appear on the same list as kupapa, even if we’re looking at first ‘p’ in the root, since the second is a longer root). In FLEx, I use expressions like these (more included below), though a simpler format could do, if that kind of power were not possible. Ideally, these expressions would be put into a config file, and the user would only see the label (I have these all done, and can come up with more if needed).

- Cross-platform. We run most of our work on BALSA, so it would need to be able to run on at least Linux, which provides BALSA’s OS.

- Simple UI. It is probably not possible to overstate the computer illiteracy we are dealing with here. People are eager to learn, and often capable of learning, but the less training we need, and the less room a given task has to screw everything else up, the better (WeSay’s UI is a great model).

- Shareable. Even if not open sourced, any tool we might use here needs to be legally put on every computer we and our colleagues use, and they don’t often have the money or access to internet to buy licenses.

- Supportable. As hard as we try to keep the possibility of errors out of our workplace, errors happen. Today we had two technological problems, each of which required a not insignificant amount of my time. If I weren’t here (or if the problems had been beyond me), the teams would have been stuck. The simpler and more accessible (or absolutely error free!) the technology is under the hood, the more likely someone local will be able to deal with problems that arise in a timely manner.
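As a rough illustration of the regular-expression requirement above (expressions kept in a config file, with the user seeing only a label), here is a minimal Python sketch. The JSON layout, the labels, and the deliberately tiny patterns are all my own assumptions for the example; real patterns would look more like the ones at the end of this post.

```python
import json
import re

# Hypothetical config: label shown to the user -> expression hidden from them.
CONFIG_JSON = """
{
  "CVCV": "^[ptk][aiu][ptk][aiu]$",
  "CVCVCV": "^[ptk][aiu][ptk][aiu][ptk][aiu]$"
}
"""


def load_filters(config_text):
    """Compile every labelled expression once, up front."""
    return {label: re.compile(pattern) for label, pattern in json.loads(config_text).items()}


def filter_words(words, filters, label):
    """Return only the words matching the expression behind the chosen label."""
    pattern = filters[label]
    return [w for w in words if pattern.search(w)]


filters = load_filters(CONFIG_JSON)
print(filter_words(["papa", "papata", "tiku"], filters, "CVCV"))
```

Because the patterns are anchored at both ends, the roots papa and papata land on different lists, which is the behaviour described for kupapa and kupapata.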

Anyway, there it is.

Examples of Useful Regular Expressions for Filtering Lexical Data

The following are output by a script, which takes as input the kinds of graphs (e.g., d, t, ng’, and ngy) used in a given language’s writing system. For instance, these expressions do not allow ‘rh’ as a single consonant, but those I did for another language do. Similarly, these are based on ten particular vowel letters, which could also be changed for a given language.

^([mn]{0,1})([[ptjfvmlryh]|[bdgkcsznw][hpby]{0,1}])([aiɨuʉeɛoɔʌ])([́̀̌̂]{0,1})([mn]{0,1})([[ptjfvmlryh]|[bdgkcsznw][hpby]{0,1}])([aiɨuʉeɛoɔʌ])([́̀̌̂]{0,1})$

(all CVCV –short vowels only)

^([mn]{0,1})([[ptjfvmlryh]|[bdgkcsznw][hpby]{0,1}])([aiɨuʉeɛoɔʌ]{1,2})([́̀̌̂]{0,1})([mn]{0,1})([[ptjfvmlryh]|[bdgkcsznw][hpby]{0,1}])([aiɨuʉeɛoɔʌ]{1,2})([́̀̌̂]{0,1})$

(all CVCV –long vowels and diphthongs OK)

^([mn]{0,1})([[ptjfvmlryh]|[bdgkcsznw][hpby]{0,1}])([aiɨuʉeɛoɔʌ]{1,2})([́̀̌̂]{0,1})\1\2([aiɨuʉeɛoɔʌ]{1,2})([́̀̌̂]{0,1})$

(all CVCV with C1=C2 –counting prenasalization)

^([mn]{0,1})([[ptjfvmlryh]|[bdgkcsznw][hpby]{0,1}])([aiɨuʉeɛoɔʌ])([́̀̌̂]{0,1})([mn]{0,1})([[ptjfvmlryh]|[bdgkcsznw][hpby]{0,1}])\3([́̀̌̂]{0,1})$

(all CVCV with V1=V2 –no long V’s or diphthongs)

^([mn]{0,1})([[ptjfvmlryh]|[bdgkcsznw][hpby]{0,1}])(a)([mn]{0,1})([[ptjfvmlryh]|[bdgkcsznw][hpby]{0,1}])\3([́̀̌̂]{0,1})$

(all CVCV with V1=V2=a)
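The script that produces these is not shown here, but the general idea of building such expressions from a language’s graphs can be sketched in a few lines of Python. This is a simplified stand-in, not my actual script: it leaves out prenasalization and long vowels, the consonant list in the usage line is invented, and the four tone marks are assumed to be the combining acute, grave, caron and circumflex.

```python
import re


def graph_alternation(graphs):
    """One capture group matching any listed graph, longest graphs first."""
    ordered = sorted(graphs, key=len, reverse=True)
    return "(" + "|".join(re.escape(g) for g in ordered) + ")"


def build_filters(consonants, vowels, tones="\u0301\u0300\u030c\u0302"):
    """Return labelled CVCV expressions for one writing system."""
    C = graph_alternation(consonants)
    V = "([" + "".join(vowels) + "])"
    T = "([" + tones + "]?)"  # optional combining tone mark
    return {
        "CVCV": re.compile(f"^{C}{V}{T}{C}{V}{T}$"),
        # Group 1 is the first consonant, so \1 forces C1=C2.
        "CVCV, C1=C2": re.compile(rf"^{C}{V}{T}\1{V}{T}$"),
        # Group 2 is the first vowel, so \2 forces V1=V2.
        "CVCV, V1=V2": re.compile(rf"^{C}{V}{T}{C}\2{T}$"),
    }


filters = build_filters(["p", "t", "k", "ng'", "ngy"], "aiɨuʉeɛoɔʌ")
print(bool(filters["CVCV, C1=C2"].match("papa")))
```

Changing the writing system then means changing only the two input lists, which is the point of generating the expressions rather than typing them by hand.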